Abstract:

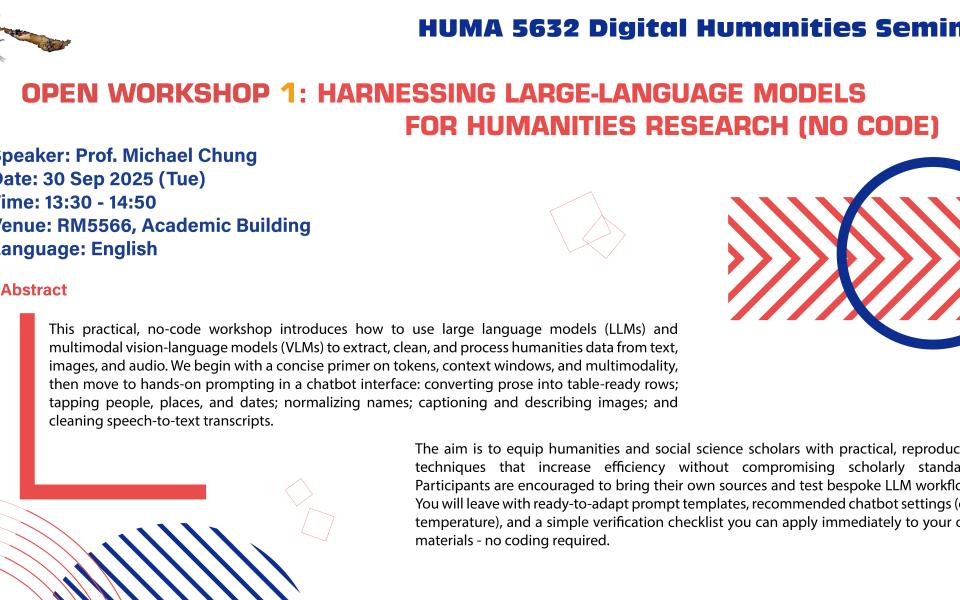

This practical, no-code workshop introduces how to use large language models (LLMs) and multimodal vision-language models (VLMs) to extract, clean, and process humanities data from text, images, and audio. We begin with a concise primer on tokens, context windows, and multimodality, then move to hands-on prompting in a chatbot interface: converting prose into table-ready rows; tagging people, places, and dates; normalizing names; captioning and describing images; and cleaning speech-to-text transcripts.

The aim is to equip humanities and social science scholars with practical, reproducible techniques that increase efficiency without compromising scholarly standards. Participants are encouraged to bring their own sources and test bespoke LLM workflows. You will leave with ready-to-adapt prompt templates, recommended chatbot settings (e.g., temperature), and a simple verification checklist you can apply immediately to your own materials, with no coding required.