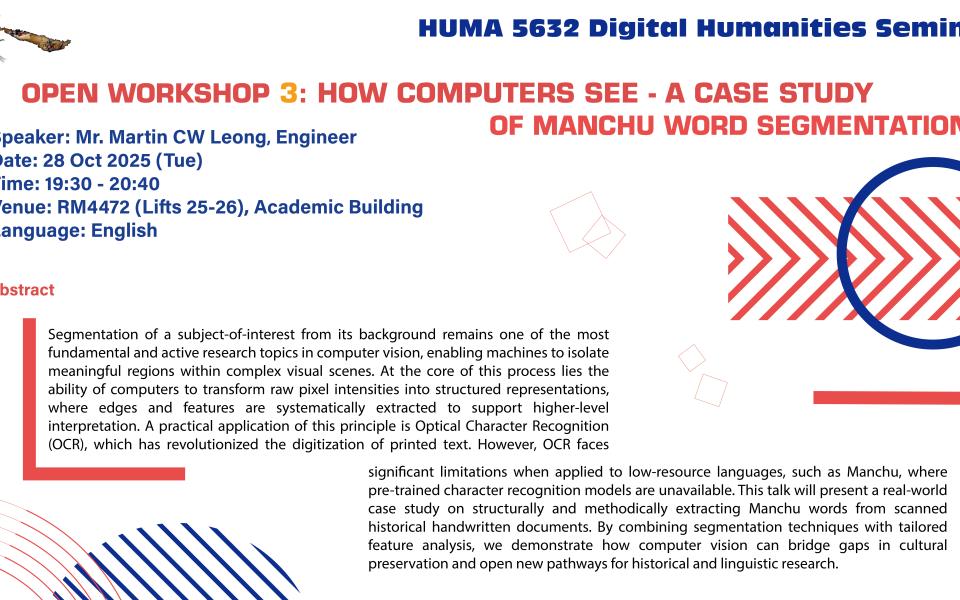

Abstract

Segmenting a subject of interest from its background remains one of the most fundamental and active research topics in computer vision, enabling machines to isolate meaningful regions within complex visual scenes. At the core of this process lies the ability of computers to transform raw pixel intensities into structured representations, from which edges and features are systematically extracted to support higher-level interpretation. A practical application of this principle is Optical Character Recognition (OCR), which has revolutionized the digitization of printed text. However, OCR faces significant limitations when applied to low-resource languages such as Manchu, for which pre-trained character recognition models are unavailable. This talk will present a real-world case study on the structured, methodical extraction of Manchu words from scanned historical handwritten documents. By combining segmentation techniques with tailored feature analysis, we demonstrate how computer vision can bridge gaps in cultural preservation and open new pathways for historical and linguistic research.