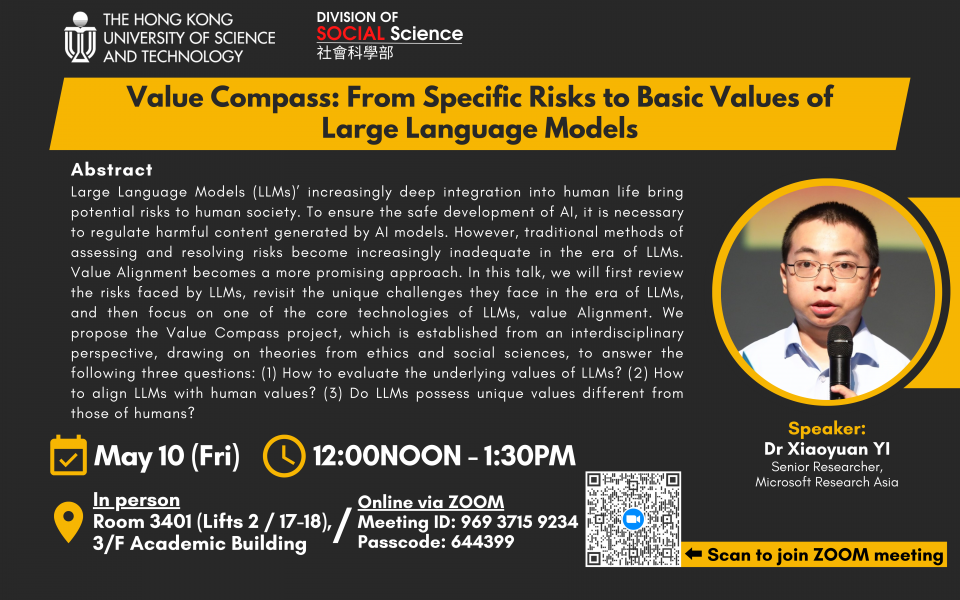

The increasingly deep integration of Large Language Models (LLMs) into human life brings potential risks to human society. To ensure the safe development of AI, it is necessary to regulate harmful content generated by AI models. However, traditional methods of assessing and resolving risks are becoming increasingly inadequate in the era of LLMs, and value alignment has emerged as a more promising approach. In this talk, we will first review the risks associated with LLMs, revisit the unique challenges of addressing them in the era of LLMs, and then focus on one of the core technologies of LLMs, value alignment. We propose the Value Compass project, which is established from an interdisciplinary perspective, drawing on theories from ethics and social sciences, to answer the following three questions: (1) How can we evaluate the underlying values of LLMs? (2) How can we align LLMs with human values? (3) Do LLMs possess unique values different from those of humans?

Xiaoyuan Yi is a Senior Researcher at Microsoft Research Asia. He obtained his bachelor’s and doctoral degrees in computer science from Tsinghua University. His research focuses mainly on Natural Language Generation (NLG) and Societal AI, and he has published 30+ papers with 1k+ citations on Google Scholar. He developed Jiuge (九歌), one of the most famous AI poetry generation systems, which has been used by tens of millions of users from 100+ countries. His honors include the Tsinghua University Supreme Scholarship (10 winners annually at THU), The 10 Most Influential People on the Internet by Xinhua Net (10 winners annually in China), Siebel Scholar (100 winners annually worldwide), the Best Paper Award and the Best Demo Award (twice) of the Chinese Conference on Computational Linguistics, the Rising Star Award of the IJCAI Young Elite Symposium (3 winners annually in China), and the China Computer Federation Outstanding Doctoral Dissertation Award (10 winners annually in China).